Claude Shannon: information icon

Early theoretical solutions led to practical applications that continue to power our digital world, while his inventions spanned the spectrum from playful to paramount.

Claude Shannon (B.S. 1936, B.S.E.Elec ’36, Hon. D.Sc. ’61) reached into the guts of the computer and calibrated its settings to suit the math equation that a visiting researcher wanted to solve. Shannon adjusted gears, switches, wheels and rotating shafts, walking from one end of the machine to the other.

The computer filled the whole space with its 100-ton heft; required 2,000 vacuum tubes to function, along with thousands of electrically operated switches and 150 motors; and mechanically calculated solutions in a record time of several hours. The complicated device was one of the first operating computers in existence.

Shannon couldn’t get enough of working with its bulky array of circuits. When he was a boy growing up in Gaylord, Michigan, his idea of fun was building a telegraph system out of barbed wire between his house and a friend’s, half a mile away. He finished high school at 16 and graduated from the University of Michigan at 20 with a double major in math and electrical engineering.

In his senior year, Shannon found an ad on a campus bulletin board for a work-study job – which was how he would end up oiling the gears of the huge computer at MIT after graduation, helping in the lab as a research assistant while working toward his master’s degree.

The giant gadget thrilled Shannon, but he craved a better solution to replace the laborious calibrations and trial-and-error design of its circuits. He found his answer in philosophy.

To Shannon, the switches in the computer resembled the binary logic he learned about in the philosophy course he took at U-M to meet his liberal arts requirement. Open and closed circuits reminded him of the true and false variables in Boolean algebra, which had come up in class.

Shannon figured he could apply the ideas to simplify circuit systems – all he had to do was invent a new kind of math. He’d base his new math on the logical principles of Boolean algebra and the binary language of ones and zeros, and he’d use straightforward equations to design on paper the simplest, cheapest circuits before even needing to start messing with wires and hardware.
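
To make the idea concrete, here is a minimal sketch in Python rather than anything taken from Shannon's thesis. It assumes that switches wired in series behave like AND and switches wired in parallel behave like OR; the function names and the three-switch circuit are hypothetical, chosen only for illustration. The check confirms on paper that a smaller circuit does the same job as a larger one.

```python
from itertools import product

def original_circuit(a: bool, b: bool, c: bool) -> bool:
    # Two parallel branches: (A in series with B) OR (A in series with C).
    # This layout needs two contacts on switch A.
    return (a and b) or (a and c)

def simplified_circuit(a: bool, b: bool, c: bool) -> bool:
    # Distributive law of Boolean algebra: A AND (B OR C).
    # Same behavior with only one contact on switch A.
    return a and (b or c)

def equivalent(f, g, n_inputs: int) -> bool:
    # Try every combination of open (False) and closed (True) switches.
    return all(
        f(*settings) == g(*settings)
        for settings in product([False, True], repeat=n_inputs)
    )

print(equivalent(original_circuit, simplified_circuit, 3))
```

With three switches there are only eight settings to try, so an exhaustive comparison is enough to show that the two layouts behave identically.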

Shannon’s insight became his MIT master’s thesis, which he published formally in 1938. These ideas became the basis of all digital technology today.

Turn Up the Volume, Turn Down the Noise

In the 1800s, long before we buried them underground, telephone wires formed thick curtains above sidewalks and city blocks. Those bulky wires transmitted conversations between callers by converting someone’s voice into electrical signals. Sound waves in the air translated directly into electromagnetic waves in the wires, with the waves matching as if drawn on tracing paper. That was analog technology.

As Shannon thought more about the binary variables in his new math, he realized that any information – not just computer circuits, but also phone conversations, telegraph messages, music on the radio, and television shows – could be standardized in the language of ones and zeros.

Rather than sending sound through phone lines as waves directly translated, Shannon sought to convert sound waves into a series of discrete points, as though he were drawing connect-the-dot waves. Those points would be represented as a sequence of ones and zeros, with the binary sequences sent through wires as electrical signals.
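
The connect-the-dots picture can be sketched in a few lines of Python. This is an illustrative toy, not a description of any real telephone system: the function name `digitize`, the 440 Hz test tone, the 8,000-samples-per-second rate, and the 4 bits per sample are all assumptions made for the example. It measures a wave at evenly spaced instants and rounds each measurement to the nearest of 16 levels, written out as ones and zeros.

```python
import math

def digitize(signal, n_samples, sample_rate_hz, bits_per_sample):
    """Sample a signal (values expected in [-1, 1]) and return binary strings."""
    levels = 2 ** bits_per_sample
    codes = []
    for n in range(n_samples):
        t = n / sample_rate_hz                 # time of this "dot"
        x = max(-1.0, min(1.0, signal(t)))     # clip to the expected range
        # Map [-1, 1] onto the integer levels 0 .. levels - 1.
        level = round((x + 1.0) / 2.0 * (levels - 1))
        codes.append(format(level, f"0{bits_per_sample}b"))
    return codes

def tone(t):
    # Hypothetical 440 Hz test tone.
    return math.sin(2 * math.pi * 440 * t)

for code in digitize(tone, n_samples=8, sample_rate_hz=8000, bits_per_sample=4):
    print(code)
```

More samples per second and more bits per sample give a closer copy of the original wave, at the cost of more ones and zeros to send.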

And Shannon had invented digital technology.

Engineers in the habit of building bulky transmission wires could hardly believe what Shannon was suggesting. That any type of information could be standardized and sent through the same lines? Certainly, this made for a much less cumbersome infrastructure. The long analog phone wires of the day created problems with electrical signals fading as electricity traveled farther away from the source. To that point, engineers had tried to fight telephone static with brute force by cranking up transmission power, but such techniques were not only expensive but potentially dangerous.

Amplifiers, originally intended to boost weak signals, merely amplified the mistakes in transmission that accumulated along the wires. With enough amplified errors, noise would be the only thing a person could hear on the other end of the phone line.

Shannon’s new idea to reduce noisy transmissions built upon his digital system. Instead of amplifying signals (simply turning up the volume and stretching sound waves ever larger), Shannon proposed adding signals by tacking error-correcting codes onto binary sequences (stringing on a few more ones and zeros). A receiving end could check the codes to verify whether an incoming message accrued any errors en route, fix the mistakes, and restore the original message. The tricky part would be to add extra signals without killing the efficiency of data transfer. Ideally, error-correcting codes would keep message bloat to a minimum by adding the fewest, but most informative, extra signals.
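
As one illustration of how a few extra ones and zeros can repair a damaged message, the sketch below uses the Hamming(7,4) code. That is a swapped-in example: the code is named for Richard Hamming, not Shannon, and the article does not say which codes Shannon had in mind. The function names are hypothetical. Each group of four message bits gets three check bits, and the receiver uses them to locate and flip any single corrupted bit.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits as a 7-bit codeword [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def hamming74_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The three checks, read as a binary number, give the 1-based
    # position of the bad bit (0 means no error was detected).
    error_position = s1 + 2 * s2 + 4 * s4
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]

message = [1, 0, 1, 1]
sent = hamming74_encode(message)
received = list(sent)
received[4] ^= 1                       # simulate noise flipping one bit
print(hamming74_decode(received) == message)
```

The trade-off the paragraph above describes is visible here: seven bits travel for every four bits of message, and the protection holds only if at most one bit in each group of seven is damaged.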

To everyone’s surprise (including his own), Shannon showed that, in theory, perfectly error-free transmission was possible – even at high speeds.

But he never quite said how.

From the Theoretical to the Practical

In the decades following Shannon’s theoretical solution, researchers toiled to put it into practice. When the NASA Voyager space probes first launched in 1977, they took off with early versions of error-correcting codes on board. The technology made it possible for the probes to transmit back to Earth their mesmerizing images of Jupiter, Saturn, Uranus, and Neptune. One of those spacecraft codes still enables us to listen to scratched CDs without the music skipping, and others help to reliably save data to hard drives. Error-correcting codes continued to grow in sophistication, culminating by 1993 in turbo codes – an innovation that came closer than any earlier code to the theoretical maximum rate of reliable transmission that Shannon had identified.

Of course, no one expected that those Voyager images from deep space would be perfect – and Shannon argued that they didn’t need to be. People don’t need every last bit of a message to understand it completely. Fr xmpl, mst ppl hv lttl dffclty rdng ths sntnc, thgh th vwls r mssng.

By exploiting redundancies in communication (such as vowels we may not need), Shannon hoped to make transmission faster and more efficient. He began by working out the statistics of the English language and its Roman alphabet. Of course, E shows up more often than Q, so Shannon opted to translate E into fewer ones and zeros to save space. U almost always follows Q, so “QU” should require fewer binary digits, and the frequent combination of “THE” fewer still.
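
One well-known way to give frequent letters shorter codes is Huffman coding, sketched below. It is a later technique named for David Huffman and is swapped in here for illustration; it is not the scheme Shannon himself worked out, and it handles single letters only, not combinations such as “QU” or “THE”. The function name and the sample text are hypothetical.

```python
import heapq
from collections import Counter

def huffman_code(text):
    """Return a dict mapping each symbol in text to a binary code string."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # A single distinct symbol still needs one bit per occurrence.
        return {symbol: "0" for symbol in freq}
    # Heap entries: (weight, unique tie-breaker, {symbol: code so far}).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent groups, prefixing 0 and 1.
        w1, _, group1 = heapq.heappop(heap)
        w2, _, group2 = heapq.heappop(heap)
        merged = {sym: "0" + code for sym, code in group1.items()}
        merged.update({sym: "1" + code for sym, code in group2.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]

sample = "EEEEEEEETTTTQU"   # made-up text: E is common, Q and U are rare
codes = huffman_code(sample)
encoded = "".join(codes[ch] for ch in sample)
print(codes)
print(len(encoded), "bits, versus", 2 * len(sample), "with a fixed 2 bits per letter")
```

Because no code is the beginning of another, the receiver can split the stream of ones and zeros back into letters without any separators.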

The same principles of reducing redundancies help to stream media on a cell phone. Data streaming works because a video loads only the changes between frames and not the stationary background. An audio file mutes inaudible frequencies, and an image discards unnecessary pixels. These tolerable omissions don’t detract from the experience; indeed, they shrink file sizes to the point that cell phone networks can handle the data loads – all thanks to digital compression, another familiar part of the modern world where Shannon left his mark.
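
The idea of sending only what changed between frames can be shown with a toy sketch. Real video formats are far more elaborate, so treat this purely as an illustration: frames are modeled here as short lists of pixel values, and the function names are hypothetical.

```python
def frame_delta(previous, current):
    """Record only the pixels that differ between two equal-sized frames."""
    if len(previous) != len(current):
        raise ValueError("frames must be the same size")
    return {
        index: new_value
        for index, (old_value, new_value) in enumerate(zip(previous, current))
        if old_value != new_value
    }

def apply_delta(previous, delta):
    """Rebuild the current frame from the previous frame plus the changes."""
    frame = list(previous)
    for index, new_value in delta.items():
        frame[index] = new_value
    return frame

background = [7, 7, 7, 7, 7, 7, 7, 7]      # stationary scene
next_frame = [7, 7, 7, 9, 9, 7, 7, 7]      # a small object appears
changes = frame_delta(background, next_frame)
print(changes)
print(apply_delta(background, changes) == next_frame)
```

Only two of the eight pixel values need to be transmitted for the second frame; the receiver fills in the rest from what it already has.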

Shannon wrote a quick dissertation on the math of genetics and moved on not long after earning his Ph.D. at MIT. He spent a year at the Institute for Advanced Study in Princeton, New Jersey, and the next 15 years working at Bell Labs, where he supported the war effort by studying the theory and practice of codebreaking.

After spending so much time determining how to best transmit a message to an endpoint clearly and quickly, Shannon now sought the opposite in his work on encryption: to transmit information so that almost no one could intercept and understand it. And yet he came to the same conclusion as before. Just as perfectly clear communication is possible with error-correcting codes, perfect secrecy also is possible if you encode a message just right. Shannon wound up helping to create the encryption apparatus that Franklin D. Roosevelt and Winston Churchill used in their secret conversations across the Atlantic during World War II, a system that still hasn’t been hacked.
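
The scheme that Shannon proved perfectly secret is the one-time pad: a random key as long as the message, shared in advance and never reused. The sketch below illustrates that idea only; it is not a description of how the wartime telephone system worked, and the function names are hypothetical. It combines each byte of the message with one byte of key using exclusive-or (XOR), and applying the same key again undoes the scrambling.

```python
import secrets

def make_key(length):
    # A fresh random key, exactly as long as the message.
    return secrets.token_bytes(length)

def xor_bytes(data, key):
    """XOR each byte of data with the matching byte of key."""
    if len(key) != len(data):
        raise ValueError("key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))

plaintext = b"MEET AT DAWN"
key = make_key(len(plaintext))
ciphertext = xor_bytes(plaintext, key)      # what an eavesdropper would see
recovered = xor_bytes(ciphertext, key)      # the receiver applies the same key
print(recovered == plaintext)
```

The secrecy depends entirely on the key being random, kept private, and used once; reusing a key breaks the guarantee.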

Press Play

Dozens of unusual unicycles leaned up against the wall in Shannon’s garage, each one an experiment. Some were progressively smaller than the last, as Shannon hoped to build the tiniest unicycle in the world. One had a square tire, one had no pedals, and a particularly precarious model was meant for two riders – probably the only tandem unicycle in existence. Shannon became famous for unicycling through Bell Labs hallways, practicing his balance while juggling. In his yard, he strung a 40-foot steel cable between tree stumps and found he could juggle while unicycling along the tightrope. Through the bay windows of his house outside Boston, where he eventually returned as a professor at MIT, Shannon could view the cart and track he built for his kids, winding through the yard toward the lake in back. A huge pair of Styrofoam shoes allowed him, every once in a while, to surprise his neighbors by walking on the water’s surface.

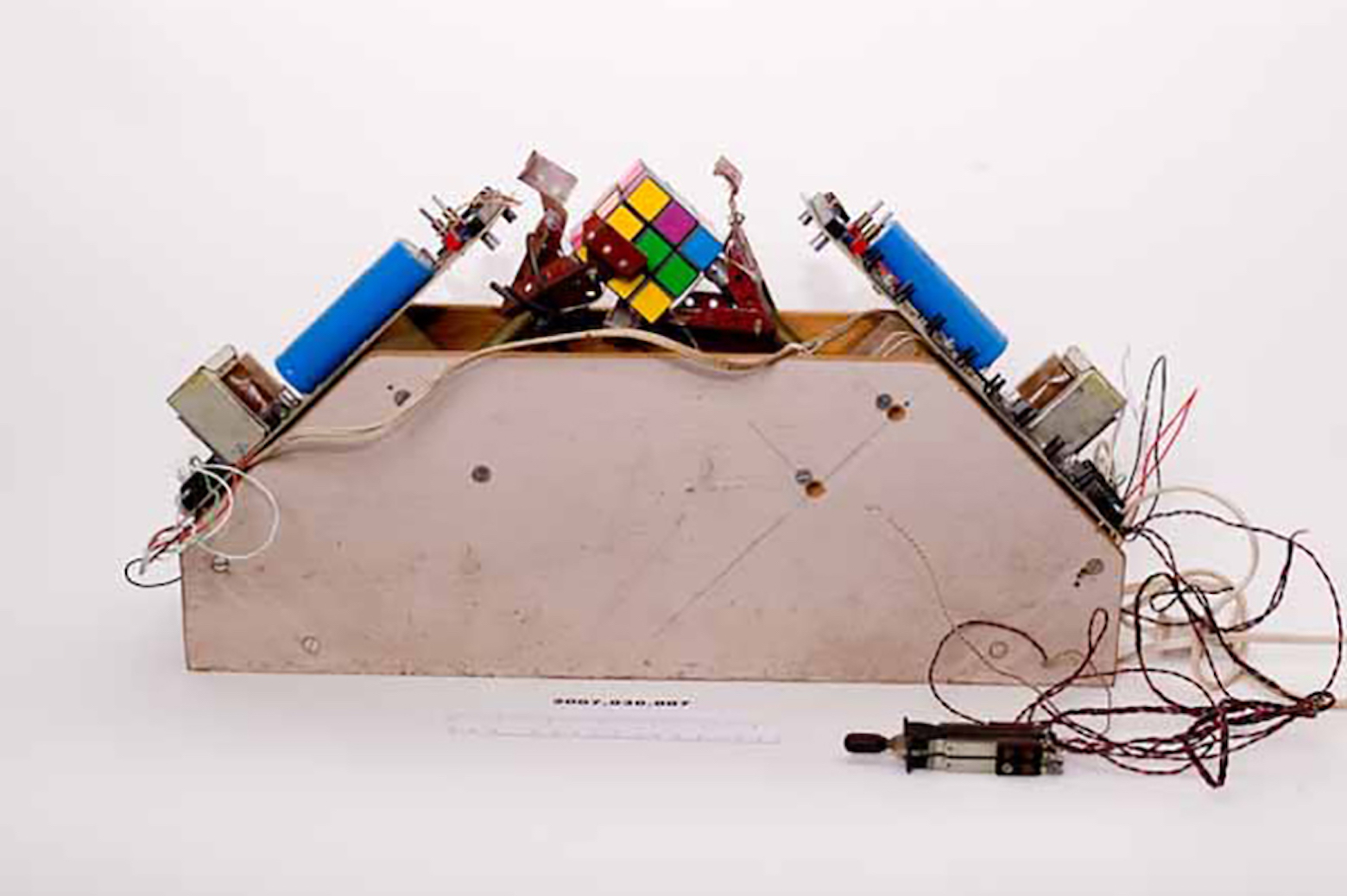

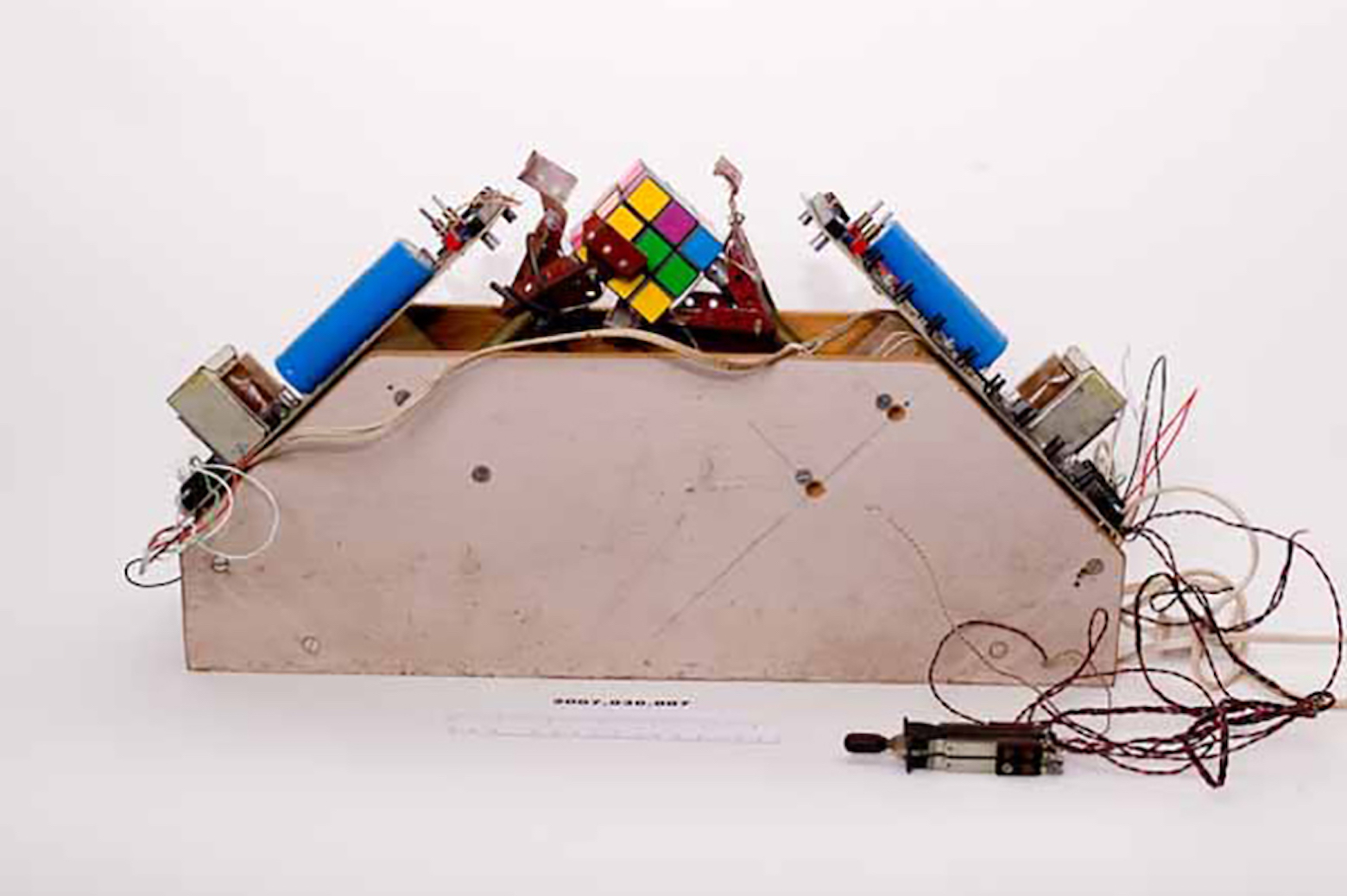

Shannon kept tinkering and inventing throughout his life, devising a flame-throwing trumpet, rocket-powered Frisbee, Rubik’s cube solver, and chess-playing robots. He filled his basement with electronics and mechanical gadgets, constructing toys from stranded components. He built a mind-reading machine that could predict with better-than-chance accuracy whether a human coin-flipper would choose heads or tails. He also built what he called the ultimate machine: a small, nondescript box with a single switch. Flipping the switch caused a tiny hand to emerge, reverse the switch, and return to rest inside the box, its one function to turn itself off again. Completely useless – if simple, playful fun counts for nothing.

Shannon worked without concern for what his discoveries might make possible. He was motivated merely by his own curiosity – and by the joy of simple play. But though Shannon might not have cared about practical applications, his work found them.

The robotic mouse he crafted, for instance – which could find its way through a maze and remember the fastest path – helped to steer research in artificial intelligence. Shannon collaborated on the first wearable computer – a wrist device the size of a cigarette pack, wired to a tiny speaker hidden in his ear and connected to a switch inside his shoe, which Shannon learned to operate using only his big toe. (His aim was to surreptitiously predict the fate of a roulette ball, thus beating the Las Vegas casinos.) More legitimately – but drawing from the same expertise in math and circuits – Shannon hit the jackpot by applying algorithms to the stock market.

Shannon’s imaginative thinking created nothing less than the digital world – and connected all of us within it.

Shannon’s innovations remain invisible precisely because they were designed to be overlooked. Error-correcting codes, compression techniques, online encryption – these inventions work best when they pass unnoticed, embedded in digital technology.

When we make a static-free phone call, communicate instantaneously by email, listen to a non-skipping CD, pocket modern microprocessors soldered with millions of circuits, watch videos on a cell phone while riding the bus, or find photos of outer space, we don’t thank Claude Shannon.

But maybe we should.

By Elizabeth Wason, science writer with the University of Michigan College of Literature, Science, and the Arts
